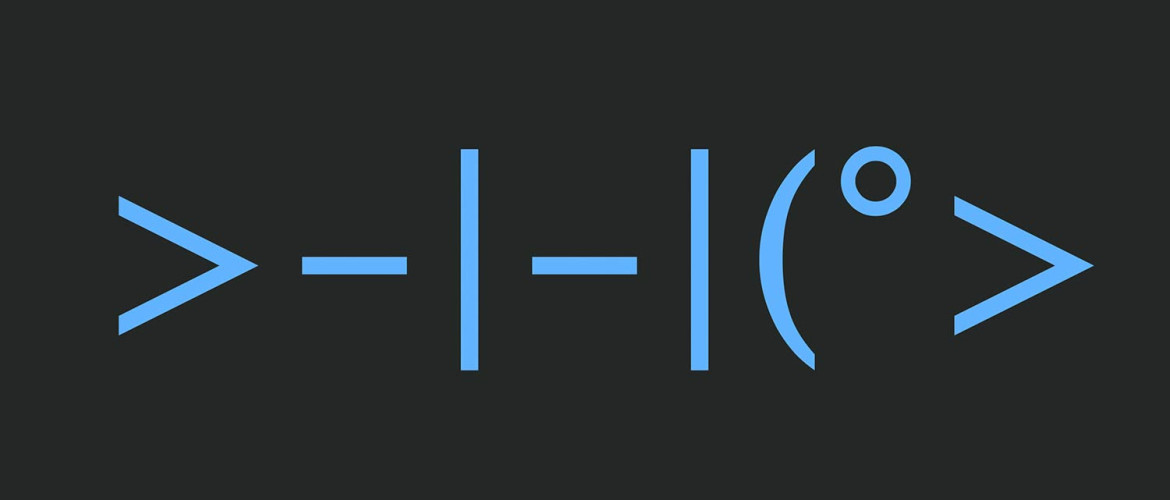

GIN is a fast, low-latency, low-memory-footprint JSON-API web framework with Test-Driven Development helpers and patterns. I open-sourced it some time ago as an experiment.

It all started when I heard so many good things about Lua that I wanted to see it in action and find a project where I could unleash its power. As an API fan, building a JSON-API server framework came naturally to me. And so GIN was born.

GIN is still in its early stages, but it already enables fast development, TDD and easy maintenance. It helps when you need an extra boost in performance and scalability, since it is written entirely in Lua and runs embedded in OpenResty, a packaged version of nginx. If you are not familiar with Lua, don’t let that scare you away: Lua is easy to learn, very fast, and simple to get started with.

Controllers

The syntax of a controller is extremely simple. For instance, a controller that returns basic information about the application looks like this:

```lua
local InfoController = {}

-- Returns the HTTP status code and a Lua table that GIN encodes as the JSON body.
function InfoController:root()
  return 200, {
    id = 'my_api',
    description = 'An API server powered by GIN.'
  }
end

return InfoController
```

The return statement specifies:

- The HTTP status code, 200

- The body of the response, which GIN encodes into JSON as:

```json
{
  "id": "my_api",
  "description": "An API server powered by GIN."
}
```
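To make the flow from return values to response concrete, here is a minimal plain-Lua sketch. It assumes a hypothetical `respond` helper standing in for the framework's dispatcher and a tiny hypothetical `encode_flat` encoder that only handles flat tables of string values; neither is part of GIN's API. Keys are sorted so the output is deterministic, because Lua tables have no defined key order.

```lua
-- The controller from the example above, unchanged.
local InfoController = {}

function InfoController:root()
  return 200, {
    id = 'my_api',
    description = 'An API server powered by GIN.'
  }
end

-- Hypothetical helper: quote a Lua string as a JSON string literal,
-- escaping double quotes, backslashes and control characters.
local function json_string(s)
  local escaped = s:gsub('[%c"\\]', function(c)
    if c == '"' then return '\\"' end
    if c == '\\' then return '\\\\' end
    return string.format('\\u%04x', c:byte())
  end)
  return '"' .. escaped .. '"'
end

-- Hypothetical encoder for flat tables whose keys and values are strings.
-- Keys are sorted so the same table always yields the same JSON text.
local function encode_flat(t)
  local keys = {}
  for k, v in pairs(t) do
    assert(type(k) == 'string' and type(v) == 'string', 'flat string tables only')
    keys[#keys + 1] = k
  end
  table.sort(keys)
  local parts = {}
  for _, k in ipairs(keys) do
    parts[#parts + 1] = json_string(k) .. ':' .. json_string(t[k])
  end
  return '{' .. table.concat(parts, ',') .. '}'
end

-- Hypothetical dispatcher: call the action as a method, then encode its body.
local function respond(controller, action)
  local status, body = controller[action](controller)
  return status, encode_flat(body)
end

local status, json = respond(InfoController, 'root')
print(status, json)
```

Because an action is just a function returning a status and a table, it can also be called directly from a plain Lua test, which fits the TDD style the framework encourages.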

Most standard JSON-API paradigms are already built into GIN.
