As some of you already know, I’m the author of Misultin, a lightweight Erlang HTTP server library. I’m interested in HTTP servers: I spend quite some time trying them out and always enjoy comparing them from different perspectives.

Today I wanted to try the same benchmark against various HTTP server libraries:

- Misultin (Erlang)

- Mochiweb (Erlang)

- Cowboy (Erlang)

- NodeJS (V8)

- Tornadoweb (Python)

I’ve chosen these libraries because they are the ones which currently interest me the most. Misultin, obviously, since I wrote it; Mochiweb, since it’s a very solid library widely used in production (afaik it has been used or is still used to power Facebook Chat, amongst other things); Cowboy, a newly born lib whose programmer is very active in the Erlang community; NodeJS, since bringing JavaScript to the backend has opened up a whole new world of possibilities (code reusable in the frontend, ease of access for various programmers,…); and finally, Tornadoweb, since Python still remains one of my favourite languages out there, and Tornadoweb has been excelling in loads of benchmarks and in production, powering FriendFeed.

Two main ideas are behind this benchmark. First, I did not want to do a “Hello World” kind of test: we have static servers such as Nginx that perform wonderfully in such tasks. This benchmark needed to address dynamic servers. Second, I wanted sockets to get periodically closed down, since having all the load on a few sockets scarcely corresponds to real-life situations.

For the latter reason, I decided to use a patched version of HttPerf. It’s a widely known and used benchmark tool from HP, which basically tries to send a desired number of requests out to a server and reports how many of these actually got replied to, and how many errors were experienced in the process (together with a variety of other pieces of information). A great thing about HttPerf is that you can set a parameter, called --num-calls, which sets the number of calls per session (i.e. socket connection) before the socket gets closed by the client. The command issued in these tests was:

```
httperf --timeout=5 --client=0/1 --server= --port=8080 --uri=/?value=benchmarks --rate= --send-buffer=4096 --recv-buffer=16384 --num-conns=5000 --num-calls=10
```

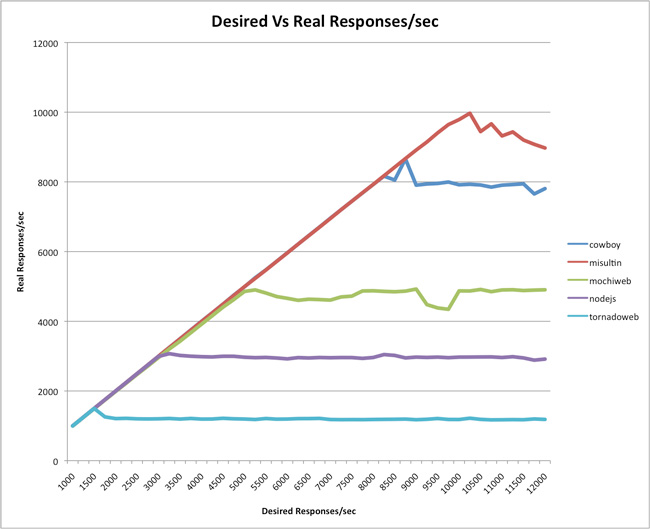

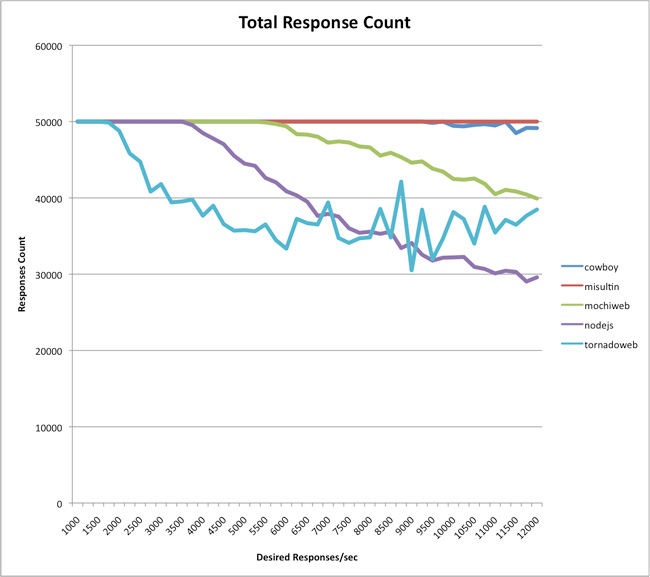

The value of rate was increased incrementally from 100 to 1,200. Since the number of requests/sec = rate * num-calls, the tests were conducted for a desired number of responses/sec increasing from 1,000 to 12,000. The total number of requests = num-conns * num-calls, which has therefore been a fixed value of 50,000 across every test iteration.
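The load arithmetic can be double-checked with a few lines of Python. This is only a sketch: the helper name `offered_load` is made up for illustration, its only inputs are the httperf flags quoted in the command, and the total is computed as num-conns × num-calls, which is what yields the fixed 50,000 figure. The "nominal duration" is an assumption that connections are opened at exactly `rate` per second.

```python
# Hypothetical helper: derives the offered load from the httperf flags used in the post.
NUM_CONNS = 5000   # --num-conns
NUM_CALLS = 10     # --num-calls


def offered_load(rate, num_conns=NUM_CONNS, num_calls=NUM_CALLS):
    """Return (requests per second, total requests, nominal duration in seconds)."""
    requests_per_sec = rate * num_calls
    total_requests = num_conns * num_calls
    # Assumption: connections are opened at exactly `rate` per second
    duration = num_conns / rate
    return requests_per_sec, total_requests, duration


for rate in (100, 600, 1200):
    rps, total, secs = offered_load(rate)
    print(f"rate={rate}: {rps} req/s, {total} requests, ~{secs:.1f}s to open all connections")
```

Note how short the highest-rate runs are: at rate=1200 all 5,000 connections are opened in a little over 4 seconds, which is less than the 5 second timeout.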

The test basically asks servers to:

- check if a GET variable is set

- if the variable is not set, reply with an XML stating the error

- if the variable is set, echo it inside an XML

Therefore, what is being tested is:

- headers parsing

- querystring parsing

- string concatenation

- sockets implementation
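To make the tested logic concrete before looking at the individual servers, here is a framework-free sketch in Python. It is not taken from any of the benchmarked servers: the function name `handle` and the XML element names (`response`, `error`, `value`) are assumptions chosen for illustration.

```python
from urllib.parse import urlsplit, parse_qs


def handle(uri):
    """Return (status, headers, body) for a GET request URI."""
    # Querystring parsing
    params = parse_qs(urlsplit(uri).query)
    values = params.get("value")
    headers = [("Content-Type", "text/xml")]
    if not values:
        # Variable not set: reply with an XML error (element names are assumed)
        body = "<response><error>no value specified</error></response>"
    else:
        # Variable set: echo it inside an XML document via string concatenation
        body = "<response><value>" + values[0] + "</value></response>"
    return 200, headers, body


print(handle("/?value=benchmarks")[2])
print(handle("/")[2])
```

Like the servers in this post, the sketch concatenates the value without XML escaping; that is fine for a benchmark with a fixed input, but not something to copy into a real application.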

The server is a virtualized up-to-date Ubuntu 10.04 LTS with 2 CPU and 1.5GB of RAM. Its /etc/sysctl.conf file has been tuned with these parameters:

```
# Maximum TCP Receive Window
net.core.rmem_max = 33554432
# Maximum TCP Send Window
net.core.wmem_max = 33554432
# others
net.ipv4.tcp_rmem = 4096 16384 33554432
net.ipv4.tcp_wmem = 4096 16384 33554432
net.ipv4.tcp_syncookies = 1
# this gives the kernel more memory for tcp which you need with many (100k+) open socket connections
net.ipv4.tcp_mem = 786432 1048576 26777216
net.ipv4.tcp_max_tw_buckets = 360000
net.core.netdev_max_backlog = 2500
vm.min_free_kbytes = 65536
vm.swappiness = 0
net.ipv4.ip_local_port_range = 1024 65535
net.core.somaxconn = 65535
```

The /etc/security/limits.conf file has been tuned so that ulimit -n is set to 65535 for both hard and soft limits.
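If you want to confirm that the file descriptor limit is actually in effect for a process, a quick check along these lines may help. It is a sketch for Unix-like systems using Python's standard `resource` module; the `limit_ok` helper and the `TARGET` constant are illustrative names, not part of the original setup.

```python
import resource

# RLIMIT_NOFILE is the per-process limit on open file descriptors,
# the same value that `ulimit -n` reports in a shell.
soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)

TARGET = 65535  # value quoted in the post


def limit_ok(limit, target=TARGET):
    # RLIM_INFINITY means "no limit", which also satisfies the target
    return limit == resource.RLIM_INFINITY or limit >= target


print(f"soft={soft} hard={hard}")
print("soft limit ok:", limit_ok(soft))
print("hard limit ok:", limit_ok(hard))
```

The limit is inherited from the launching shell or service, so the check only tells you something if it is run from the same environment as the server.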

Here is the code for the different servers.

Misultin

```erlang
-module(misultin_bench).
-export([start/1, stop/0, handle_http/1]).

start(Port) ->
    misultin:start_link([{port, Port}, {loop, fun(Req) -> handle_http(Req) end}]).

stop() ->
    misultin:stop().

handle_http(Req) ->
    % get value parameter
    Args = Req:parse_qs(),
    Value = misultin_utility:get_key_value("value", Args),
    case Value of
        undefined ->
            Req:ok([{"Content-Type", "text/xml"}], ["no value specified"]);
        _ ->
            Req:ok([{"Content-Type", "text/xml"}], ["", Value, ""])
    end.
```

Mochiweb

```erlang
-module(mochi_bench).
-export([start/1, stop/0, handle_http/1]).

start(Port) ->
    mochiweb_http:start([{port, Port}, {loop, fun(Req) -> handle_http(Req) end}]).

stop() ->
    mochiweb_http:stop().

handle_http(Req) ->
    % get value parameter
    Args = Req:parse_qs(),
    Value = misultin_utility:get_key_value("value", Args),
    case Value of
        undefined ->
            Req:respond({200, [{"Content-Type", "text/xml"}], ["no value specified"]});
        _ ->
            Req:respond({200, [{"Content-Type", "text/xml"}], ["", Value, ""]})
    end.
```

Note: I’m using the misultin_utility:get_key_value/2 function in this code since proplists:get_value/2 is much slower.

Cowboy

```erlang
-module(cowboy_bench).
-export([start/1, stop/0]).

start(Port) ->
    application:start(cowboy),
    Dispatch = [
        %% {Host, list({Path, Handler, Opts})}
        {'_', [{'_', cowboy_bench_handler, []}]}
    ],
    %% Name, NbAcceptors, Transport, TransOpts, Protocol, ProtoOpts
    cowboy:start_listener(http, 100,
        cowboy_tcp_transport, [{port, Port}],
        cowboy_http_protocol, [{dispatch, Dispatch}]
    ).

stop() ->
    application:stop(cowboy).
```

```erlang
-module(cowboy_bench_handler).
-behaviour(cowboy_http_handler).
-export([init/3, handle/2, terminate/2]).

init({tcp, http}, Req, _Opts) ->
    {ok, Req, undefined_state}.

handle(Req, State) ->
    {ok, Req2} = case cowboy_http_req:qs_val(<<"value">>, Req) of
        {undefined, _} ->
            cowboy_http_req:reply(200, [{<<"Content-Type">>, <<"text/xml">>}], <<"no value specified">>, Req);
        {Value, _} ->
            cowboy_http_req:reply(200, [{<<"Content-Type">>, <<"text/xml">>}], ["", Value, ""], Req)
    end,
    {ok, Req2, State}.

terminate(_Req, _State) ->
    ok.
```

NodeJS

```javascript
var http = require('http'), url = require('url');
http.createServer(function(request, response) {
    response.writeHead(200, {"Content-Type":"text/xml"});
    var urlObj = url.parse(request.url, true);
    var value = urlObj.query["value"];
    if (value == ''){
        response.end("no value specified");
    } else {
        response.end("" + value + "");
    }
}).listen(8080);
```

Tornadoweb

```python
import tornado.ioloop
import tornado.web

class MainHandler(tornado.web.RequestHandler):
    def get(self):
        value = self.get_argument('value', '')
        self.set_header('Content-Type', 'text/xml')
        if value == '':
            self.write("no value specified")
        else:
            self.write("" + value + "")

application = tornado.web.Application([
    (r"/", MainHandler),
])

if __name__ == "__main__":
    application.listen(8080)
    tornado.ioloop.IOLoop.instance().start()
```

I took this code and ran it against:

- Misultin 0.7.1 (Erlang R14B02)

- Mochiweb 1.5.2 (Erlang R14B02)

- Cowboy master 420f5ba (Erlang R14B02)

- NodeJS 0.4.7

- Tornadoweb 1.2.1 (Python 2.6.5)

All the libraries have been run with the standard settings. Erlang was launched with Kernel Polling enabled, and with SMP disabled so that a single CPU was used by all the libraries.

Test results

The raw printout of HttPerf results that I got can be downloaded from here.

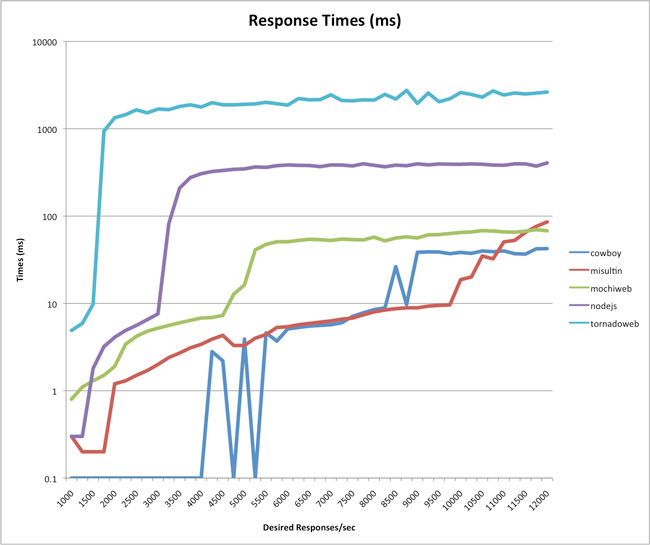

Note: the above graph has a logarithmic Y scale.

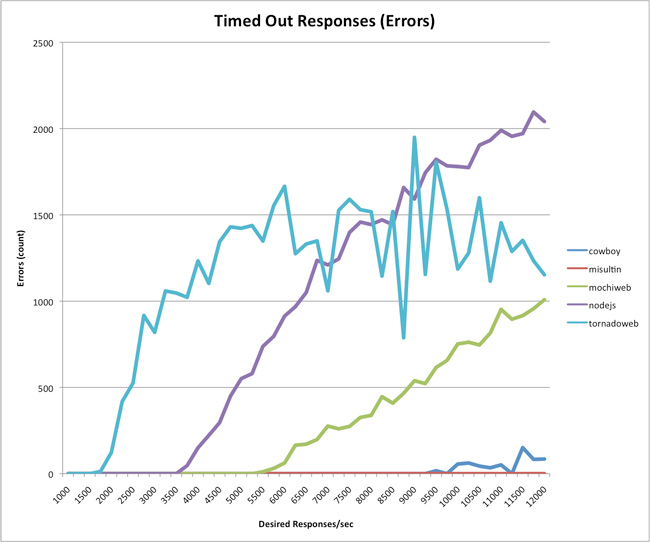

According to this, we see that Tornadoweb tops out at around 1,500 responses/second, NodeJS at 3,000, Mochiweb at 4,850, Cowboy at 8,600 and Misultin at 9,700. While Misultin and Cowboy experience very few errors or none at all, the other servers seem to struggle under the load. Please note that “Errors” are timeout errors (over 5 seconds without a reply). Total responses and response times speak for themselves.

I have to say that I’m surprised by these results, to the point that I’d like feedback on the code and methodology, along with alternative tests that could be performed. Any input is welcome, and I’m available to update this post and correct any errors I’ve made, as an ongoing discussion with whoever wants to contribute.

However, please do refrain from flame wars, which are not welcome here. I have published this post precisely because I was surprised by the results I got.

What is your opinion on all this?

—————————————————–

UPDATE (May 16th, 2011)

Due to the success of these benchmarks, I want to stress an important point to keep in mind when you read any benchmark (including mine).

Benchmarks are often misleadingly interpreted as “the higher you are on a graph, the better *lib-of-the-moment-name-here* is at doing everything”. This is absolutely the wrong way to look at them. I cannot stress this point enough.

‘Fast’ is only 1 of the ‘n’ features you desire from a webserver library: you definitely want to consider stability, features, ease of maintenance, low standard deviation, code usability, community, development speed, and many other factors when choosing the library best suited to your own application. There is no such thing as a generic benchmark. These ones relate to a very specific situation: fast application computational times, loads of connections, and small data transfers.

Therefore, please take this with a grain of salt and do not jump to generic conclusions regarding any of the cited libraries, all of which I find interesting and valuable, as I clearly stated at the beginning of my post. And I am still very open to criticism of the described methodology or of other things I might have missed.

thx for your benchmark!

may it be possible to include yaws to your list of benchmarked servers?

I have been wondering why you see 5-second timeouts. My guess is it happens in the overload situation where the server can not keep up with the barrage of incoming requests. In that case, requests are being queued up and that queue is growing at a faster rate than it can be dequeued.

Hence, at a certain point in time, reaching the front end of the queue takes more than 5 seconds and then the timeouts begin to occur.
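The queueing argument can be made concrete with a little arithmetic. This is a rough sketch, assuming a constant arrival rate and a constant service rate (a simplification, since real traffic is burstier), and the numbers are illustrative rather than measured. The function name `seconds_until_timeouts` is made up for this example.

```python
def seconds_until_timeouts(arrival_rate, service_rate, timeout=5.0):
    """Time after which a newly queued request waits longer than `timeout`.

    With arrivals outpacing service, the backlog after t seconds is
    (arrival_rate - service_rate) * t requests, and draining it takes
    backlog / service_rate seconds. Solving wait == timeout for t gives
    the result. Returns None when the server keeps up and the queue
    never grows.
    """
    excess = arrival_rate - service_rate
    if excess <= 0:
        return None
    return timeout * service_rate / excess


# Illustrative numbers only: a server that can sustain 1,500 responses/sec
print(seconds_until_timeouts(2000, 1500))  # 15.0
print(seconds_until_timeouts(1000, 1500))  # None
```

Under these assumptions, the closer the offered load is to the server's capacity, the longer it takes before the first timeouts show up, which fits the idea that faster servers simply postpone the problem.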

I’d love to see a (vertical) histogram plot for each of the measured points. It would tell us more than the (average I presume) response time. Especially with respect to the timeout/queue hypothesis. Looking at your raw results, it is clear that there is a stddev and a median it could be interesting to plot against the average. It will tell interesting things.

What you may be experiencing is what I hinted at in a blog post some time back: http://jlouisramblings.blogspot.com/2010/12/differences-between-nodejs-and-erlang_14.html – in which a kernel density plot clearly shows an interesting difference in processing. I would believe both Tornadoweb and NodeJS may be experiencing this.

Mochiweb probably gets hit by the same problem after a while, if a request gets scheduled out for some time. In other words, the timeouts are a direct consequence of processing speed. The faster you can process your requests, the longer it will go before timeouts become apparent. Preemptive multitasking in Erlang gives a bit more flexibility here, but in the end it will fall prey to the same problem.

Very interesting plots there! Why are you surprised by the results, or more specifically what about them are you surprised by? I wonder if you profiled each one where the cause of the difference would lie.

In at least one case I’m certainly surprised, nodejs. Node uses a good deal of C, http parsing and socket handling is all done in C with some very well known fast libraries. That probably means something else is quirky there? Perhaps the number of connections allowed to be queued for accept is low by default?

Those numbers do not surprise me at all. Erlang’s raw performance is well known.

The surprise for me is how ugly the other framework/language examples are. If you’re using a high level programming language and it looks like unreadable garbage, why not go for something lower level?

Alas, programming isn’t just about generating raw speed. Here’s the same example in Sinatra: http://pastie.org/1881529

Notice how, unlike all the other examples, this one is actually elegant and readable? Run it with rack and thin in production and it will get a lower spot on these benchmarks (hovering near Tornado perhaps), but that’s the point. I’ve sacrificed performance for readability and maintainability, which is kind of the point of high level software IMHO.

I could produce a really low level Rack example that would be more illustrative of the raw performance there, and it would still be considerably more readable than all of those examples.

Hey Roberto.

Nice benchmarks, just wish I could investigate the apparent issues before you posted it. ;)

After looking at this all day I think I’ve figured out the reason Cowboy fails a little before misultin. Could you try Cowboy again on this setup with --rate=1200 and transport options [{port, Port}, {backlog, 1024}]? I’m fairly confident this is the only tweak required to prevent the Cowboy errors.

I can’t say about the requests/s, I do not get the same result here, Cowboy always outperforms misultin by about 1.5 times the reqs/s when the rate gets big enough.

Anyway I’ll probably increase the backlog by default, it’s not like we’re losing much by having a bigger one.

Thanks.

Problem is that you have 2 CPUs on that server. Erlang will use both, but V8 and CPython don’t thread properly, so you’d want to put a load balancer in front of them (iptables will do) and balance the requests across two instances to get “fairer” results.

krunaldo, Roberto said that SMP was disabled. “Erlang was launched with Kernel Polling enabled, and with SMP disabled so that a single CPU was used by all the libraries.”

I really like erlang. It offers a number of very interesting ideas. My only concern is that doing erlang well requires advanced understanding and, let’s face it, there is a lot of code to be written out there and not everyone is going to want to spend the time to be an expert… now try to hire them.

Roberto: disregard my first comment, the backlog alone isn’t enough to improve it properly, I also need a max_connections. I’ll default both of them at 1024 as it gives me very good stability in default settings. I’ll email you when I’m done with those changes.

Richard: Erlang requires very few thoughts to make a good distributed app compared to the equivalent in any other language. It just takes a while to learn and adapt, it’s not that complicated past that point.

How about Netty, MINA or Grizzly?

@Kyle Drake, though I do get your point, actually Erlang can be pretty good at this due to its pattern matching syntax. Look at the sinatra-style misultin example here and judge for yourself: http://code.google.com/p/misultin/wiki/ExamplesPage#REST_support

@Loïc Hoguin yes, my plan was to have you see them first, but unfortunately we currently are on a different timezone and didn’t want to wait a day :) anyway, this is an open discussion. Misultin and Mochiweb use a default backlog of 128, so were I to increase Cowboy’s backlog to 1024 it would only be fair to do the same on the other two libraries as well, don’t you think? You say that you get 1.5 times the requests, are you running the same tests here above and are getting all different results? I’ve received the email with max_connections’ implementations and I’ll try that asap, however I’ll reduce the backlog if you have set it to 1024 by default.

I published my comments on my blog:

http://steve.vinoski.net/blog/2011/05/09/erlang-web-server-benchmarking/

@Steve, replying here too for the sake of completion.

I can only agree to many of the sensible points you make.

Whenever I discuss benchmarks with people around me I always start by stating that speed is only one of the ‘n’ things you want from a webserver, and these would include stability, features, ease of maintenance, a low standard deviation (i.e. a known average behaviour), and such.

And that’s precisely the point. There are specific situations where speed can seriously help, and it’s the kind of situation I’m often confronted with: small packets, fast application times, loads of connections. And that was the reason for me writing Misultin in the first place.

That’s trading features for speed, no secret in that. If it were to be a big application, probably the percentage of time used by any of these servers for header parsing / socket handling / query-string parsing would be only a minor part in the overall process that produces a response. Because of that, it is most probable that the different servers would flat down to more similar speeds on a big application. That explains why I am not interested in adding application “thinking time”, because that is, by definition, application-dependent, and I test that every time I release a specific app.

As a last note, I did not include Yaws for a simple reason: it’s a full-blown server, the “old guy” is a feature-loaded, unquestioned webserver, and because of that I simply thought that it would have been unfair to add it to the comparison. This really sounded to me like apples and pears, not a minor difference of the missing headers you are referring to. IMHO, of course, and I’ll be more than pleased to add Yaws to the next run which I might be called to do.

AFA contributing to OTP itself, I would be more than delighted and there are signs to try to get along on that.. I just believe it to be out of my reach: I wrote misultin simply because I needed it.

Keep up the good work,

r.

(note: I am far from knowledgeable in web programming and network programming, so take this with a grain of salt).

As far as tornado is concerned, and I would guess NodeJS as well, I don’t think you are measuring anything else than the “language” speed, and what you do in the actual request contributes almost nothing in terms of CPU in the tornado case. As soon as you are in the get handler, you are only concatenating a few short strings together, and python can certainly do that more than 2000-3000 times / sec.

It would of course need similar benchmarks/setup to actually compare, but haskell-based web frameworks can do one order of magnitude more than the numbers you give (http://snapframework.com/blog/2010/11/17/snap-0.3-benchmarks). This would confirm that you are only really comparing the language implementation more than anything else.

Hi,

Looking at your Node code it seems like you aren’t doing anything at all to use both CPUs (or cores). Since Node processes are single threaded, people typically use a library like Cluster (http://learnboost.github.com/cluster/) to pre-fork and distribute load amongst several processes to make use of multiple cores. Depending on the specs of the virtual server that could increase Node’s performance 2-4x.

Tom: “Erlang was launched with Kernel Polling enabled, and with SMP disabled so that a single CPU was used by all the libraries.”

All his webserver setups are using only one core so he could use the second to launch the tests.

@Roberto: yeah backlog of 128 might be fine on your environment. It isn’t on my laptop though, because it is very good hardware, and I was forced to increase the rate a lot before getting errors in the first place. So more requests at once, more needing queueing, etc.

By the way this and max_connections should be tweaked in all servers IMHO because you’re going to want them different depending on the hardware and the web server software to get the best results, which may be different values. Any sane sysadmin using these web servers would tweak the values for their needs. Defaults aren’t always geared toward high performance (see Apache for example).

Kyle:

sc:htstub(80, fun({_,Request,_}) -> "" ++ proplists:value("value", Request, "Screw sinatra") ++ "" end).

With previous benchmarks showing gevent winning by some measures over tornado I feel it might have been a better representative of a fast python http server.

(see http://nichol.as/benchmark-of-python-web-servers )

I’d also like to see how these stack up to real speed-focussed webservers, like Zeus, Lightstreamer and so on.

Hi Roberto,

Congrats on creating a highly scalable web server! And nice work graphing the output also!

We also made an extremely fast server in haskell, and put up similar benchmarks against tornado and node:

http://www.yesodweb.com/blog/preliminary-warp-cross-language-benchmarks

Also note the benchmark against the fastest Ruby implementation (Goliath); that should help to put some of the comments here in context.

It might be really interesting to benchmark Erlang vs. Haskell on a multi-core machine since they can both scale across multiple cores. Please contact me if you are interested in that for your next run: I can provide a program and haskell install instructions. Or we can do it the other way around if you want to add Erlang to our benchmark program.

Greg Weber

If you do intend to redo these benchmarks, then might I suggest you try Twisted too? Code:

```python
from twisted.web import server, resource

class MainHandler(resource.Resource):
    isLeaf = True

    def render_GET(self, request):
        value = request.args.get('value', [''])[0]
        request.setHeader('Content-Type', 'text/xml')
        if not value:
            return 'no value specified'
        else:
            return '%s' % value

if __name__ == '__main__':
    from twisted.internet import epollreactor
    epollreactor.install()
    from twisted.internet import reactor

    root = MainHandler()
    factory = server.Site(root)
    reactor.listenTCP(8080, factory)
    reactor.run()
```

Sorry for the bad paste regarding the Twisted example above. Check out this gist: https://gist.github.com/974784

Why is Cowboy using binaries, while Misultin and Mochiweb use strings? At least in Mochiweb you can use binaries too.

Also why test with ulimit of 64K connections?

Let’s see if NodeJS and Tornado can scale to C1M problem ;)

I just submitted a pull request to fix these error cases with Node. The problem is that the event loop gets starved by incoming connections, stuck in a while (1) { accept() } loop. That causes the timeouts.

See: https://github.com/joyent/node/pull/1057 for details. The patch applies against Node 0.4.7 if you want to try again with the current release.

thank you matt, this is the perfect response and attitude towards these tests. i’ll do my best to try it out to give you some feedback, asap.

I just ran some profiling against the Node.js server, and it looks like a large majority of time is being consumed by the c buffer to V8 string conversion. This is probably the biggest bottleneck holding Node back in terms of performance in general.

To quickly (and probably poorly) sum the problem up: V8 is very smart about its internal string representation. This is great up until you need to either move a c string into or out of V8 (ie read from or write to a socket). To do this is a full mem copy of the string data.

Can’t say this is the only thing making node underperform, but it is a huge chunk.

@Roberto:

I have setup an easy environment for testing Perl based web servers, following your specification.

https://github.com/dolmen/p5-Ostinelli-HTTP-benchmark

Interesting comparison. I would love to know whether this was run on linux 2.6+ as the use of epoll vs select could have a big impact on the results. tornado, for example, falls back to select on older systems.

Very interesting; thanks for posting these results.

@olivier: interesting!

@chris: as stated it’s ubuntu 10.04 LTS up-to-date, so the answer is yes.

Thanks for results

Node.js, remove url.parse, should see a big uptick in performance.

Hi Roberto,

I’m new to erlang, Misultin, Yaws, Cowboy, WebMachine and MochiWeb (and everything else at http://www.chicagoboss.org/projects/1/wiki/Comparison_of_Erlang_Web_Frameworks).

“Let’s face it, the Erlang web development community isn’t large enough to support numerous web servers and frameworks.”

So which one? It would be nice for someone to include Yaws in this as it’s the most stable.

http://joeandmotorboat.com/2009/01/03/nginx-vs-yaws-vs-mochiweb-web-server-performance-deathmatch-part-2/ shows a yaws comparison to mochiweb allowing us to interpolate roughly between the two. (for just benchmarking)

hello :D

this looks nice but could you maybe do this benchmark again?

node has drastically improved since 0.4.7 and still continues to improve in big steps.

i bet it’s time for node 0.8 to come out. 0.5 would already punch 0.4 away in terms of performance.

Any chance you could re-run these and include WebMachine?

A travis-ci.org + github repo setup that people could fork and contribute to would also be quite fab. :)

Thanks!

Ok. Ignore the WebMachine bit…as it uses Mochiweb.

The travis-ci.org + github repo idea still stands though. :)

What about memory usage, nodejs is pretty low on that

pretty surprising results — thanks for sharing the benchmarks; did you happen to also try out eventmachine?

i’m interested in seeing how ruby’s eventmachine fares with python’s tornado — i always thought nodejs would be better (overall) than python but it seems that tornado is slowly topping in terms of errors while node just seems to head its way up linearly on fault tolerance when under load. i wonder if eventmachine will top off like that of tornado :)

nodejs is faster but stress testing seems to speak for itself :D

Hi, I’m trying to run the experiments in the same way as yours.

But httperf crashes due to a buffer overflow (*** buffer overflow detected ***: httperf terminated) for both Cowboy (rate>=1125) and Mochiweb (rate>=975). It works well for Misultin for all rates up to 1200.

Does it mean something wrong with httperf, or with Cowboy/Mochiweb?

Thanks!

Hi, finally I managed to run the experiments on a virtualized 32-bit Ubuntu. And I also noticed that in your article, “The total number of requests = num-conns * rate” should be “num-conns * num-calls”, right?

Great benchmark! It’s a bit dated at this point. Given this is the only real benchmark I could find, any chance you can reproduce it with latest versions? Specifically, NodeJS (and V8) have gone through major upgrades and its performance may change substantially.

Thanks!

This is a fantastic comparison, thank you!

I wonder what the performance looks like now, in 2020, since the libraries have matured and undergone quite a few iterations. Any chance of a Round 2?

Kind regards,

Tammar

Yes, I am also interested in a relook. How do they stack up now? Any new contenders?